Measuring the impact of technology

In the humanitarian space, monitoring and evaluating the impact of an intervention has long been the norm. For example, what is the impact of safe water on community health outcomes? How do cash distributions contribute to gender dynamics in the home? How does improved food security affect community conflict dynamics? The list goes on.

In the technology for development space, a common assumption is that technology improves efficiency or scale and reduces costs. But does it? Even more importantly, why should we care?

What Mercy Corps is doing

Mercy Corps’ Technology for Development (T4D) team is applying the same monitoring and evaluation practices to our tech-enabled programs. By building tools and processes to evaluate the impact of technology, we hope to speak specifically and quantitatively about the improvements that technology brings. Because we treat these programs like more traditional humanitarian programs, we use similar monitoring and evaluation techniques to understand their impact. Technology also brings an additional benefit: digital monitoring tools. Combining the back-end data that technology generates with traditional evaluation practices lets us uphold proven standards while exploring new kinds of impact and digging into the effect technology actually has on programs and work processes across Mercy Corps.

Our monitoring and evaluation focuses on four key areas of impact and is supported by various tools:

Reach and scale

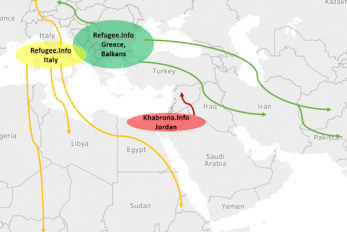

Digital tools help us reach larger populations of participants, beyond the people we can reach through in-person, on-the-ground interactions and across geographies broader than the communities where our field offices are located or that they focus on. For instance, migrants in Europe are sharing information we provide with their families, friends and personal networks in destination and home countries, spreading verifiable information about migrating to people we might not otherwise have been able to reach through in-person interventions.

To measure scale, we can look at a digital tool such as a Facebook page designed to give refugees information. Its back-end analytics show our reach and how people engage with the information as it spreads online. Information designed for people in Italy is being shared back to Nigeria, Eritrea, Afghanistan, and Iran. We can also see how our target audience shares the information through their own networks around the world, particularly along migration routes. This in turn feeds into other analysis and assessment tools used for program decision-making.

Time and process efficiency

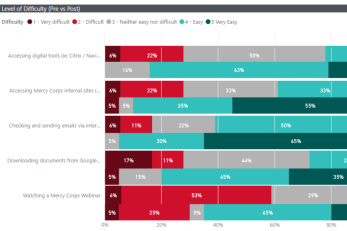

In pilot programs that integrate technology into our existing work, we’re collecting pre- and post-participation surveys so we can not only measure efficiency gains or improvements in impact, but also attribute those improvements to specific technologies. For example, in field offices where we’ve installed Cisco Meraki WiFi networks, we can show how faster internet has improved people’s ability to do everyday tasks. In the chart above, the decrease in red scores indicates that fewer people report difficulty.

To measure efficiency, we create pre/post surveys for our own process flows to understand how long each step takes, from data collection through analysis, before and after implementing an advanced analytical tool. Quantitative data collection is useful for determining changes in process or time attributable to technology. These surveys can supplement the data flow maps that are typically part of technology advisory and engagement work.
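As a rough illustration of the pre/post comparison described above, here is a minimal sketch, assuming each survey question is recorded as one categorical answer per respondent. The `"difficult"` label, the function names, and the data layout are hypothetical, not Mercy Corps’ actual survey schema or tooling. It reports the share of respondents reporting difficulty before and after a rollout, plus the change in percentage points.

```python
from dataclasses import dataclass

# Hypothetical survey label marking a respondent who reported difficulty.
DIFFICULT = "difficult"


@dataclass
class PrePostResult:
    pre_share: float   # fraction of pre-survey respondents reporting difficulty (0-1)
    post_share: float  # fraction of post-survey respondents reporting difficulty (0-1)

    @property
    def change_pp(self) -> float:
        """Change in percentage points; negative means fewer people report difficulty."""
        return (self.post_share - self.pre_share) * 100


def share_reporting(responses: list[str], label: str = DIFFICULT) -> float:
    """Return the fraction of responses equal to `label`."""
    if not responses:
        raise ValueError("no responses to summarize")
    return sum(1 for r in responses if r == label) / len(responses)


def compare_pre_post(pre: list[str], post: list[str]) -> PrePostResult:
    """Summarize one survey question asked before and after a technology rollout."""
    return PrePostResult(share_reporting(pre), share_reporting(post))
```

For example, `compare_pre_post(pre_answers, post_answers).change_pp` returns a negative number when fewer people report difficulty after the change. The pre and post groups need not be the same size, since each share is computed against its own total.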

Program quality improvement

Beyond efficiency and time savings, we are also measuring how new processes and technologies help improve program quality. In the case of our beneficiary registration tech platform, we can measure improvements in program processes and data flows, and those results help other teams see how the platform could be useful to them.

For measuring program quality improvements, Key Informant Interviews have been immensely helpful, particularly with staff whose roles involve the most interaction with the technology. They can speak to the challenges they faced before, during and after technology adoption, and contribute to lessons learned. For example, we have learned that tech platforms can automate steps, which improves efficiency and also reduces the risk of error.

Comparative program impact

Where possible, we’re assessing how the addition of technology makes a difference by comparing results from tech-enabled programming with those from similar programs without a tech element. For example, in Iraq, we’re piloting virtual reality (VR) to complement our psychosocial support for conflict-affected youth. Our program participants use VR for guided meditation activities, such as walking down a path surrounded by butterflies and flowers, or standing in a tranquil lake while speaking with a facilitator. We designed the monitoring and evaluation to include both participants using VR and others receiving psychosocial therapy through in-person or group activities without VR, allowing us to understand the outcomes VR has for the children.

Wherever technology is incorporated into a program, it is possible to compare that program with its non-tech equivalent. Although we have not run a fully randomized controlled trial, this comparison lets us see whether VR appears to contribute to additional well-being outcomes compared with participants who are not using the technology.
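To make the tech versus non-tech comparison concrete, here is a minimal sketch, assuming each participant has a numeric well-being score from the same instrument. It computes the difference in mean scores between the VR group and the comparison group, along with Welch’s t statistic, one common choice when group sizes and variances differ; which test Mercy Corps actually uses is not stated here, so treat this as an illustration. Because the groups were not randomized, the result describes an association, not proof that VR caused the difference.

```python
import math
import statistics


def welch_t(treated: list[float], comparison: list[float]) -> tuple[float, float]:
    """Return (difference in means, Welch's t statistic) for two independent groups.

    Uses sample variances (n - 1 denominator), so each group needs at least
    two scores. `treated` would hold VR participants' scores and `comparison`
    the scores of participants without VR (hypothetical inputs).
    """
    if len(treated) < 2 or len(comparison) < 2:
        raise ValueError("each group needs at least two observations")
    diff = statistics.mean(treated) - statistics.mean(comparison)
    # Standard error without assuming equal variances (Welch).
    se = math.sqrt(
        statistics.variance(treated) / len(treated)
        + statistics.variance(comparison) / len(comparison)
    )
    if se == 0:
        raise ValueError("both groups have zero variance")
    return diff, diff / se
```

If SciPy is available, `scipy.stats.ttest_ind(vr_scores, comparison_scores, equal_var=False)` computes the same Welch statistic along with a p-value.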

Bringing tools together

Integrating these techniques may seem obvious, but combining traditional monitoring and evaluation with back-end technology analytics lets us tell a more compelling story. Back-end data from office WiFi installations, for example, shows the overall number of users and the bandwidth per user, and we use that information to set internal benchmarks for the overall health of the networks.
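The benchmarking step could look something like the sketch below, assuming per-office totals for bandwidth and user counts have already been exported from the WiFi dashboard. The `NetworkSnapshot` fields and the 1 Mbps-per-user threshold are hypothetical placeholders for whatever internal benchmark a team sets; the sketch does not call any Cisco Meraki API.

```python
from dataclasses import dataclass

# Hypothetical internal benchmark: minimum acceptable bandwidth per user, in Mbps.
MIN_MBPS_PER_USER = 1.0


@dataclass
class NetworkSnapshot:
    office: str
    total_mbps: float  # aggregate bandwidth observed over the period (assumed export field)
    users: int         # distinct users seen over the same period (assumed export field)


def bandwidth_per_user(snap: NetworkSnapshot) -> float:
    """Average bandwidth available per user for one office."""
    if snap.users <= 0:
        raise ValueError(f"{snap.office}: no users recorded")
    return snap.total_mbps / snap.users


def flag_unhealthy(
    snaps: list[NetworkSnapshot], threshold: float = MIN_MBPS_PER_USER
) -> list[str]:
    """Return the offices whose bandwidth per user falls below the benchmark."""
    return [s.office for s in snaps if bandwidth_per_user(s) < threshold]
```

Keeping the threshold as a parameter lets each office compare against a benchmark suited to its own workload.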

With surveys, interviews, or focus group discussions, we can show how a tool affects people by asking about their workflows and use of the internet, and better understand how improved bandwidth makes daily tasks easier. Before the new WiFi, 100% of users in Kenya and Guatemala reported difficulty watching a Mercy Corps webinar. Now, 92% of users report they can watch webinars without problems. Imagine what that means for connecting teams across the world. In Uganda, we found that the time needed to load the tools used for daily work has decreased and that Skype call connections have improved. The interview responses make this even more compelling: “It’s improved the relaying and receipt of information significantly therefore reducing the frustrations with communication given the stability it now has and less interruptions with Skype calls.”

The “so what?”

How many times do donors ask for innovative ideas? How many times do you have an innovative idea that doesn’t fit neatly into a proposal? Ultimately, when we can provide evidence of the impact of technology specifically, we can further advocate for the use (or disuse) of technology.

The first value of measuring technology is that it provides specific evidence to donors and other stakeholders. Mercy Corps has seen how the data we collect about technology excites donors, partners, and peer agencies, eventually leading to wider adoption of these tools and techniques. Cisco (T4D’s primary partner) has also found that the information offers additional insight into the impact of its Meraki equipment donations. More broadly, donors often ask for innovative solutions using technology, but it is important to understand the role technology plays relative to the broader program activities. What was it about a given technology that brought about change?

Technology is a growing trend in the development space. Tech solutions seem to offer endless possibilities and are often presented as shiny fixes, so it is important to figure out whether a given solution is merely hype or viable and practical. By trialing new technologies and working out whether and why they work compared with non-tech solutions, we can speak clearly about their value-add. Nor does it have to be new technology. By gathering evidence and understanding the implications of technologies like social media or WiFi provision, we can use the evidence and lessons learned to advocate for policy change or influence research agendas.

Finally, measuring technology can help teams not currently working with technology understand its potential and incorporate tools in the future. New programs have access to technology landscape assessment tools, program reporting tools from technology trials, and guidance materials and stories that inspire use cases for technology. As teams use these tools and frameworks, the digital landscape across Mercy Corps becomes clearer. All of this, in turn, strengthens the culture of technology at Mercy Corps as more people talk about it, use the tools, and experiment in their own space.

Next steps

With these metrics and evaluation activities, we’re building a body of knowledge around how to measure and learn from a number of different technology work streams. This helps us evolve and refine our tools as we replicate and scale successful projects.

We’ll continue to measure and test the effectiveness of our tools to learn the precise value each technology adds for our teams and the people we help, and to better understand where and why we may not have achieved the results we anticipated.